LYCB

🧥LYCB: Leave Your Clothes Behind

Keifer Lee, Shubham Gupta, Karan Sharma

Report

Abstract

The demand for assets in the virtual world as recently gained a lot of attention. We present a novel framework dubbed LYCB: Leave Your Clothes Behind that allows users to directly generate a 3D mesh object of garments from a monocular video using Neural Radiance fields. The proposed method fills the gap in literature by addressing issues such as accurately fitting on to foreign bodies for virtual try-ons and model complex cloth properties using Neural Radiance fields and physics-based simulations. This lets us transfer clothing type and bodies easily. Our code is made available at https://github.com/IamShubhamGupto/LYCB

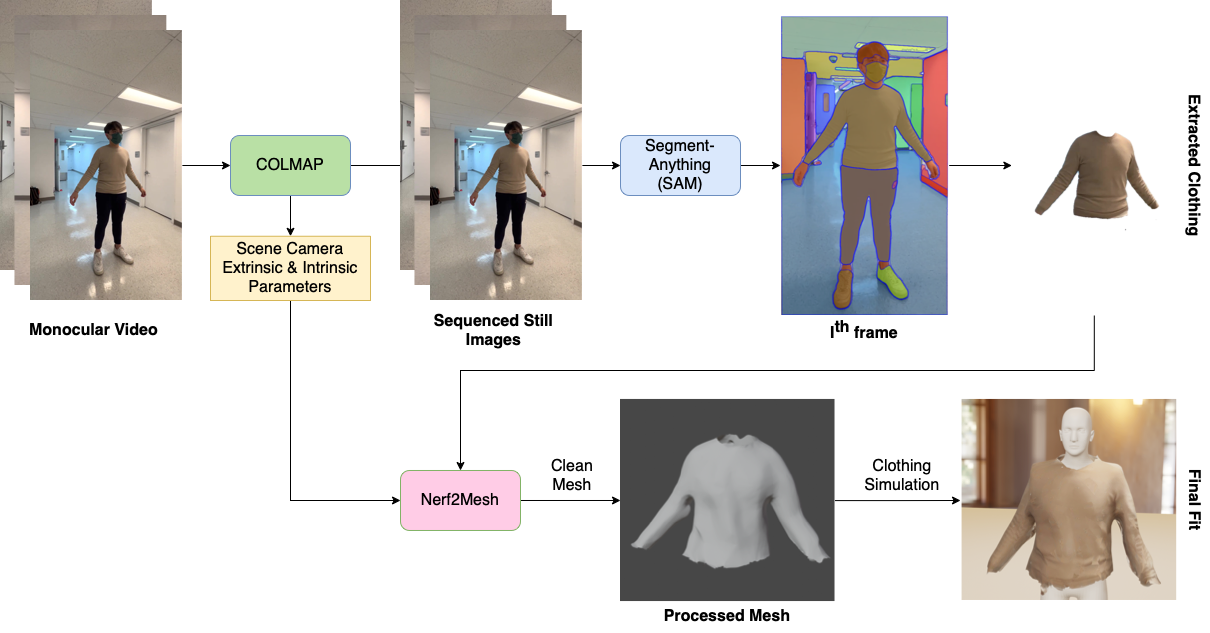

Pipeline of Leave Your Clothes Behind (LYCB)

Results & Sample Weights

Below is an illustration of the input / output at each stage of the pipeline with custom data.

Sample data at each stage. From left to right - Monocular input sequence, SAM extracted mask, NeRF2Mesh reconstructed mesh and test-fit with cloth simulation in Blender

Sample data used in illustration above and the corresponding trained implicit model and reconstructed mesh can be found here

Getting Started

Installation

For LYCB, NeRF2Mesh's dependencies and Segment-Anything are key pre-requisites. Optionally, if you would like to use your own data (without prepared camera parameters), COLMAP will be required as well.

#Installing key requirements

#NeRF2Mesh

pip install -r requirements.txt

pip install git+https://github.com/NVlabs/tiny-cuda-nn/#subdirectory=bindings/torch

pip install git+https://github.com/NVlabs/nvdiffrast/

pip install git+https://github.com/facebookresearch/pytorch3d.git

#SAM

pip install git+https://github.com/facebookresearch/segment-anything.git

For COLMAP installation, check out https://colmap.github.io/install.html

Running LYCB

- If you are starting from a raw video (e.g. MP4) file or set of images without known camera parameters, then you will have to run

COLMAPfirst to generate said parameters. Note: Dataset will need to be in the format of nerf-synthetic or MIP-NeRF 360's dataset. Also please do run COLMAP on the raw images (e.g. not masked clothing images) to ensure proper feature matching with COLMAP.

#Example for video

python scripts/colmap2nerf.py --video ./path/to/video.mp4 --run_colmap --video_fps 10 --colmap_matcher sequential

#Example for images

python scripts/colmap2nerf.py --images ./path/to/images/ --run_colmap --colmap_matcher exhaustive

- Assuming that you the corresponding dataset (e.g. images and

transforms.json) prepared, next you will have to runSAMto extract the clothing.

- Make sure to keep the sequence of input-output images in the same order to ensure that the information within

transforms.jsoncorresponds to the right frame index. - Additionally, SAM by default is able to generate up to 3 masks per input with its multi-mask option, which will be very handy in isolating the clothing of interests only. By default the extraction script will select the highest scoring mask amongst the 3 generated mask, but you may have to fiddle around with the script to ensure that only the clothing item of interest is extracted consistently across all frames; could consider adding support to automatically do said matching in the futuer.

- Lastly, the extraction script assumes that the target (e.g. clothing of interest) is consistently held at the frame center consistently across all frames; feel free to fiddle around the positive and negative keypoints within the extraction script to change the expected target focal point as required.

#Extract target clothing with SAM; checkout extraction.py for more args / options

python extraction.py --data /path/to/images \

--output /output/path \

--enable_neg_keypoints

- Once the target clothing has been extracted and verified (e.g. extractions are consistent and without occlusion), proceed repack the data according to the chosen dataset format; for e.g.

#E.g. nerf-synthetic format

- dataset_root

|_ images/

|_ img_0001.png

|_ img_0002.png

|_ ...

|_ transforms.json

- Generate neural-implicit representation and subsequently reconstruct mesh from queried points. For more information, advanced options and tip, checkout the original NeRF2Mesh implementation here. Note that you will probably have to fine-tune the parameters here according to your scene / object.

#Stage 0 | Fit radiance field, perform volumetric rendering and extract coarse mesh

python nerf2mesh.py path/to/dataset --workspace testrun --lambda_offsets 1 --scale 0.33 --bound 3 --stage 0 --lambda_tv 1e-8 --lambda_normal 1e-1 --texture_size 2048 --ssaa 1 #Enforce coarser texture_size and limit SSAA for headless rendering

#Stage 1 | Fine-tune coarse model, generate mesh, rasterize, and clean

python nerf2mesh.py path/to/dataset --workspace testrun --lambda_offsets 1 --scale 0.33 --bound 3 --stage 1 --lambda_normal 1e-1 --texture_size 2048 --ssaa 1

-

Clean up generated mesh with any method / software of your choice as required - e.g. MeshLab. Now you have the final 3D mesh of the piece of clothing you so desired!

-

[Optional] Run clothing simulation to perform virtual try-on on desired target with the software of your choice; note that you will have to provide your own target try-on body and rig it (if applicable) accordingly. For our demo, we used Blender for the clothing simulation and a simple non-descriptive male dummy model as the try-on target.

-

All done!

Acknowledgements & References

The project is built on top of ashawkey's PyTorch implementation of NeRF2Mesh here.

#NeRF2Mesh

@article{tang2022nerf2mesh,

title={Delicate Textured Mesh Recovery from NeRF via Adaptive Surface Refinement},

author={Tang, Jiaxiang and Zhou, Hang and Chen, Xiaokang and Hu, Tianshu and Ding, Errui and Wang, Jingdong and Zeng, Gang},

journal={arXiv preprint arXiv:2303.02091},

year={2022}

}

#ashawkey's PyTorch implementation

@misc{Ashawkey2023,

author = {Ashawkey},

title = {nerf2mesh},

year = {2023},

publisher = {GitHub},

journal = {GitHub repository},

howpublished = {\url{https://github.com/ashawkey/nerf2mesh}}

}

#COLMAP

@inproceedings{schoenberger2016sfm,

author={Sch\"{o}nberger, Johannes Lutz and Frahm, Jan-Michael},

title={Structure-from-Motion Revisited},

booktitle={Conference on Computer Vision and Pattern Recognition (CVPR)},

year={2016},

}

@inproceedings{schoenberger2016mvs,

author={Sch\"{o}nberger, Johannes Lutz and Zheng, Enliang and Pollefeys, Marc and Frahm, Jan-Michael},

title={Pixelwise View Selection for Unstructured Multi-View Stereo},

booktitle={European Conference on Computer Vision (ECCV)},

year={2016},

}

#Segment-Anything (SAM)

@article{kirillov2023segany,

title={Segment Anything},

author={Kirillov, Alexander and Mintun, Eric and Ravi, Nikhila and Mao, Hanzi and Rolland, Chloe and Gustafson, Laura and Xiao, Tete and Whitehead, Spencer and Berg, Alexander C. and Lo, Wan-Yen and Doll{\'a}r, Piotr and Girshick, Ross},

journal={arXiv:2304.02643},

year={2023}

}

LICENSE

This project is MIT Licensed